Artificial Intelligence

The game-changer that may soon be out of our control

By Yaodong Gu

College students have highly praised Chat-GPT and say they are looking forward to the next iteration of generative artificial intelligence (AI). However, AI experts worry that the growth of AI will lead to more cases of academic plagiarism, the elimination of jobs, and uncontrollable long-term impacts.

In the past few months, AI has dominated media headlines and attracted the world’s attention, and Chat-GPT has been one of the most mentioned terms. It is an AI chatbot developed by OpenAI, an American AI company founded in 2015, and it was released in November 2022. Since its release, Chat-GPT has become a worldwide sensation, reaching an estimated 100 million monthly users within just two months.

Chat-GPT Improves Productivity in Text Tasks

“I have been using it to figure things like quite easily instead of searching Google, which gives you suggestions of different websites,”

– Khaled Ahmed Culshaw

Khaled Ahmed Culshaw is a freshman at the University of Hong Kong (HKU) majoring in biological sciences.

As a biology student, Culshaw's daily tasks include reading essays and learning how to use biotechniques. He said he is glad there is now a resource that helps him work more efficiently on text-based tasks.

“Let’s say in perspective of writing and explaining your document in a better and more organised way, it is a very good source to learn from and also utilise for your own benefits,” he said.

Rahman Ave, a freshman at HKU majoring in engineering, agreed with Culshaw.

“I do agree with him that it can help us optimise our tasks,” Ave said. “I’m not saying use it for plagiarism because it is pretty easy to get caught, so of course not. But I do use them for like, citations and explanations, like basically how you use the Internet for studying.”

Shakked Noy and Whitney Zhang, two MIT PhD students, published a study on the productivity effects of Chat-GPT. They found that it can significantly improve productivity on writing and editing tasks.

Noy and Zhang recruited 444 participants for their online experiment and asked them to complete two occupation-specific, incentivized writing tasks. The participants generally came from media agencies, publishers, consulting firms, data teams and human resources departments, and each wrote pieces tied to their own occupation, such as press releases, short reports, analysis plans and delicate emails.

According to the results, the group that used Chat-GPT was 37 percent faster at completing its tasks than those who did not, without sacrificing the quality of their work. In another part of the experiment, participants worked independently in the first round and then with Chat-GPT as an aid in the second round. The researchers found that with Chat-GPT’s help, participants did better on writing quality, content quality and originality.

Besides improving productivity in writing and editing, Chat-GPT can also help in specific areas such as market analysis and programming.

Vivian Lyu, a student at New York University (NYU) majoring in business and minoring in mathematics and computer science, has extensive experience working with Chat-GPT. She said she uses the program to help her map out the overall landscape of the Chinese toy market and estimate market trends over the next few years.

“I ask Chat-GPT to provide some kind of valuation for the market and what market share of brands and the weaknesses of the key players, what are their driving factors in industry, and what are the technologies,” Lyu added.

Academic Plagiarism

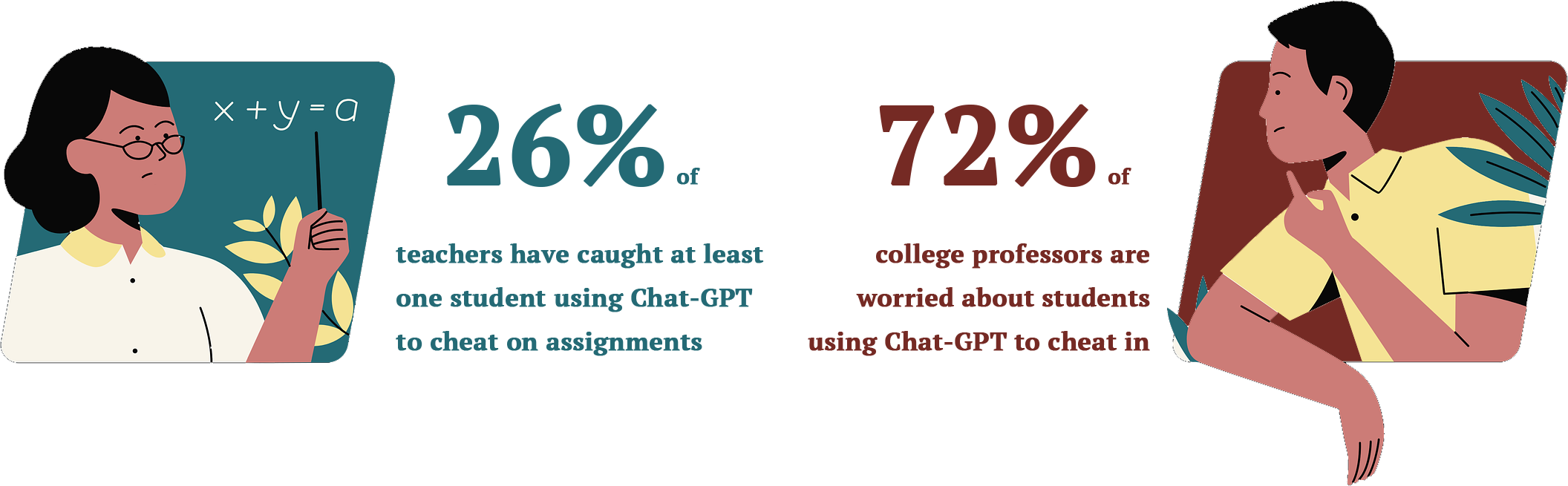

The Chat-GPT boom has brought a serious problem: academic plagiarism. According to a Study.com survey of American K-12 schools, 26 percent of teachers have caught at least one student using Chat-GPT to cheat on assignments. Meanwhile, another survey of college educators found that 72 percent of college professors are worried about students using Chat-GPT to cheat. Chat-GPT-related plagiarism has permeated all levels of education.

“It is toxic. In my class, I won’t allow my students to use it,” said Sylviasy Yang, a former high school English teacher in Suzhou, China, who strongly opposes the use of Chat-GPT in any assignments or tests for her class.

“How can you ask for answers and help during the test? It’s so weird and definitely cheating,” Yang said emphatically. “It’s not the way to study. If you really need AI’s help, just use it to revise your grammar and find academic sources.”

Alexander Davidson, an English teacher at University of Detroit Jesuit High School, was quoted in a CBS Detroit report as worrying that students may neglect writing and communication skills if they rely too heavily on Chat-GPT.

Chat-GPT, as its full name Chat Generative Pre-trained Transformer suggests, is a type of generative AI that can do just about whatever you ask of it, such as writing, drawing, calculating and even coding. Chat-GPT4, the latest iteration, can even accept both image and text inputs. This means students do not even need to learn how to write specific prompts; they can simply send documents to Chat-GPT4 and wait for an answer.

Early this year, OpenAI released API access to Chat-GPT, which allows third parties to connect to OpenAI’s cloud service. An API (application programming interface) lets developers send requests to OpenAI’s models and build the responses into their own software. As a result, thousands of studios and companies have scrambled to integrate these models into their own products.

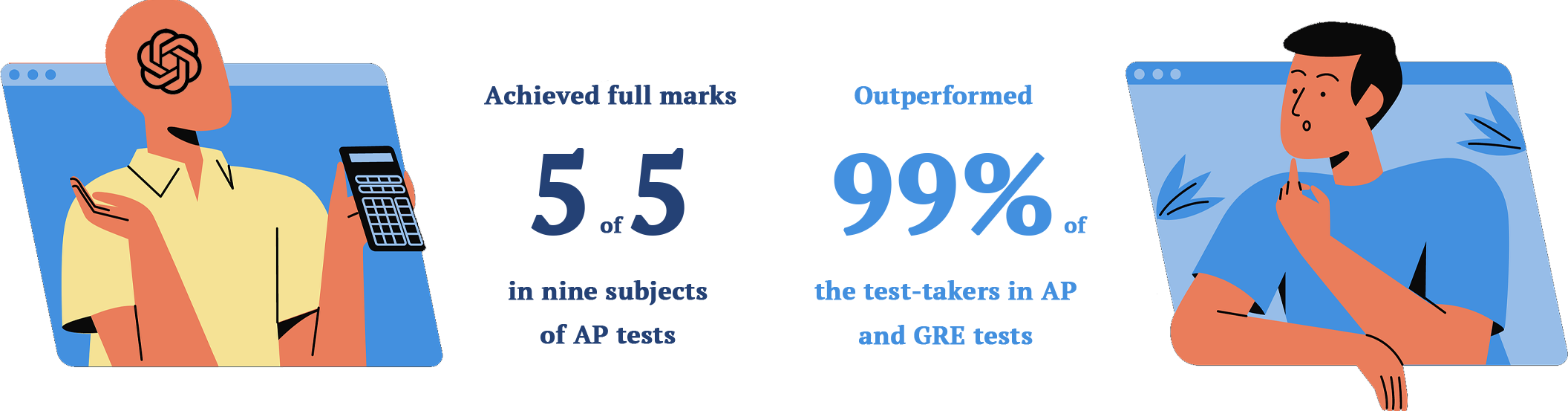

On Advanced Placement (AP) exams, college-level tests taken by high school students who want to boost their GPA and earn college credit, Chat-GPT4 earned the top score in nine subjects. On the GRE, a standardised test for graduate school applications, Chat-GPT4 scored 169 out of 170 on the verbal section, placing it in the 99th percentile of test-takers. In other words, Chat-GPT4 outperformed the vast majority of human test-takers on the AP and GRE tests. Such strong academic performance is bound to spread anxiety among educators.

Yang expressed deep concern that parents may not be ready to accept AI instructors teaching their children.

“It sounds weird right? We train robots and let robots train our next generation,” she said.

AI Will Dramatically Transform Jobs

However, problem-solving ability is just the tip of the generative AI iceberg. Many people have also voiced concerns that AI could replace human jobs.

“Because the Chat-GPT4 is able to read text, image, and anything you give it, so I think it can replace some of the jobs potentially right now just like as a tutor,” Culshaw said. “You can just give text to Chat-GPT and it can give you a better answer and a more signalised one.”

Culshaw believes that powerful generative AI like Chat-GPT already has the ability to replace entry-level occupations. In particular, he believes AI can easily replace beginning coders who have only basic coding skills.

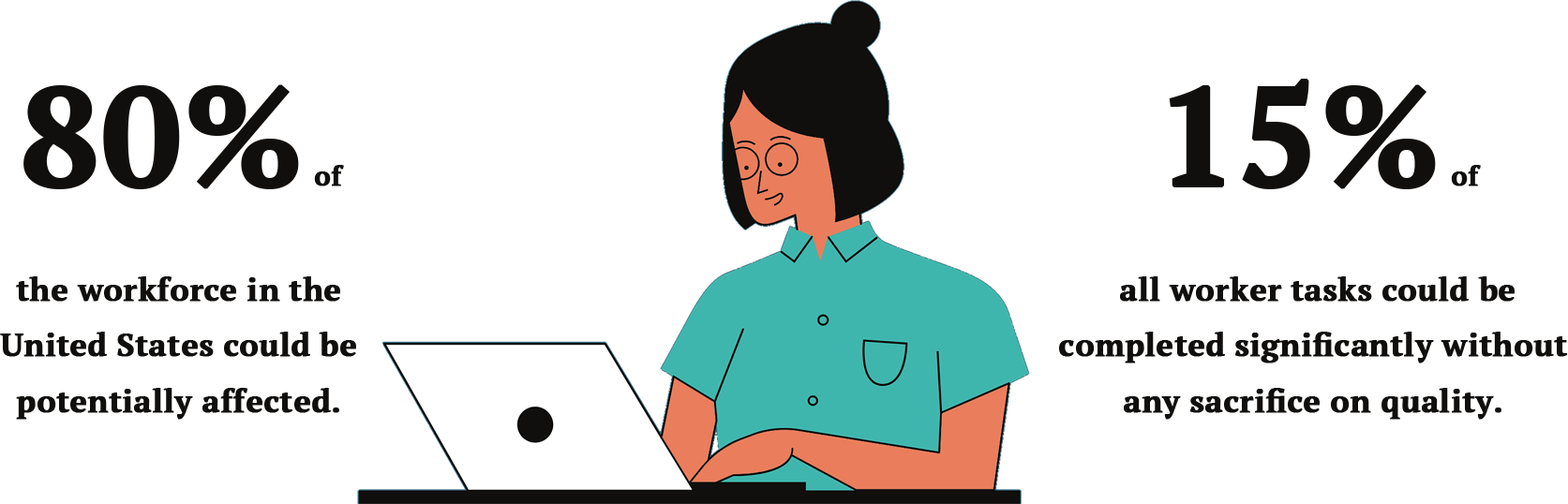

Researchers from OpenAI analysed 1,016 occupations and found that around 80 percent of the U.S. workforce could have at least 10 percent of their work tasks affected. The biggest challenge is considered to come from the growth of large language models (LLMs), which are trained on very large text datasets. Scaling up the training data and the size of the model has brought significant gains in capability, which is why LLMs stand out from so many earlier computer models. GPT-2 has 1.5 billion parameters, while GPT-3 has 175 billion. Although OpenAI has not disclosed the size of GPT-4, the company claims that the new model “exhibits human-level performance on various professional and academic benchmarks.”

According to this research, jobs related to accounting, editing, financial analysis, graphic design, entry-level coding and data analysis are among the most likely to be affected by Chat-GPT.

According to OpenAI’s research, with access to a large language model, about 15 percent of all worker tasks in the U.S. could be completed significantly faster without any sacrifice in quality. When software and tools built on top of large language models are taken into account, the researchers estimated that this share could rise to 47 to 56 percent.

“Yes, there are some jobs that AI can take responsibility for those actions,” Baris Duru said. “Some job opportunities are going to decrease, but at the same time AI will produce other job opportunities.”

Duru is an HKU alumnus currently seeking full-time employment in Hong Kong. He believes that AI will replace low-quality jobs, but it won’t be able to take over all jobs. “Because high quality means high responsibilities and we still have humans to take those responsibilities against AI,” Duru said.

Culshaw made a similar point. “I don’t think that Chat-GPT has anything to do with biological science because it cannot carry out any evolution experiments.”

Ave believes that current AI has many flaws, such as being unable to make decisions without human guidance. “I don’t think it is intelligent enough to replace humans a lot,” Ave said. “I don’t think it can do better because we have to design things, we have to make decisions, and we have to keep it in mind of a lot of effects. I don’t think ChatGPT has that level of intelligent cognitive ability to make those decisions.”

Can high-quality work really avoid being eliminated by AI? The answer may be no. In OpenAI’s research, the scientists provided a table listing the occupations most exposed to AI, some of which require a college degree or higher. Mathematicians, tax preparers, financial quantitative analysts, writers and authors, web and digital interface designers and many others have very high exposure scores, which means GPTs and GPT-driven software could save these professionals a significant amount of work time.

On the other hand, OpenAI also listed some occupations that are less likely to be affected by AI, such as agricultural equipment operators, athletes and sports competitors, bus and truck mechanics, and cooks. It seems that manual work will be harder for AI to replace.

Will AI Seek Power For Itself?

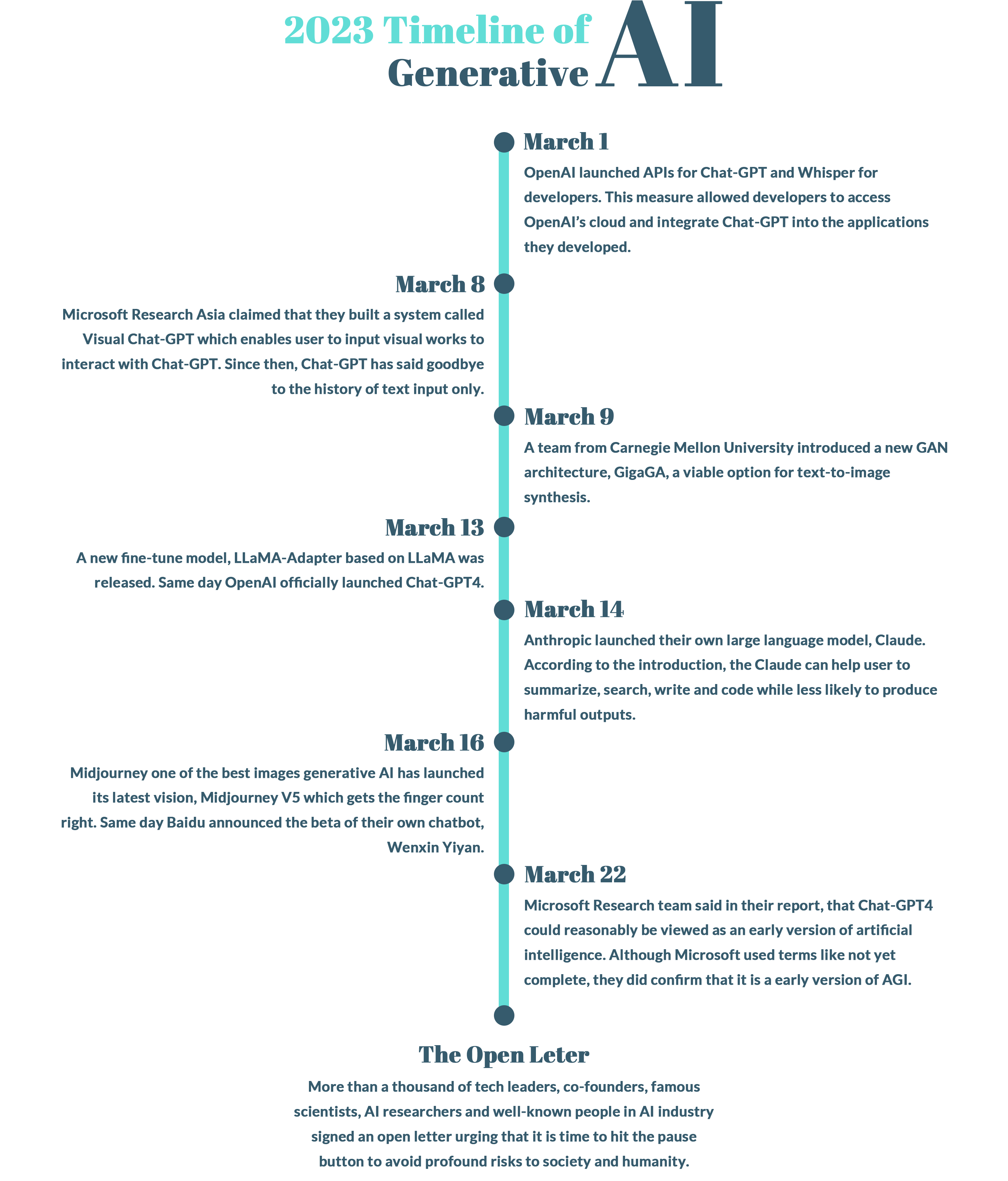

This March, the world experienced a big bang in generative AI. Once the world witnessed how powerful Chat-GPT is, more and more tech giants and start-ups joined this cutthroat race. Chat-GPT is like the small butterfly in the butterfly effect.

The butterfly effect describes how a small change in initial conditions can result in a large difference in the future. The term was coined by the American mathematician and meteorologist Edward Lorenz, who said, “the nonlinear equations that govern the weather have such an incredible sensitivity to initial conditions, that a butterfly flapping its wings in Brazil could set off a tornado in Texas.”

Several remarkable events in human history can be explained by the butterfly effect. The assassination of Archduke Franz Ferdinand, which triggered World War I, is one example. Now, the butterfly in the AI industry has begun to flap its wings.

On March 22, more than a thousand tech leaders, tech company co-founders, well-known scientists and AI industry pioneers, including Elon Musk, co-founder of SpaceX and Tesla, signed an open letter urging that it is time to hit the pause button to avoid the profound risks AI poses to society and humanity.

Screenshot of the open letter, which has gathered over 30,000 signatures. (Source: Future of Life Institute)

In the open letter, the initiators described the current leaps in AI as an “out-of-control race” to develop ever more powerful digital minds that no one, not even their creators, can “understand, predict, or reliably control.” After posing four soul-searching questions, the initiators called on all AI labs to immediately pause the training of AI systems more powerful than GPT-4 for at least six months.

The open letter cites a paper, X-Risk Analysis for AI Research, which lists eight speculative hazards and failure modes spanning many aspects of human society. One of them is power-seeking behaviour, which the paper describes as “a concern because power helps agents pursue their goals more effectively, and there are strong incentives to create agents that can accomplish a broad set of goals.” Looking back at the development of nuclear power and comparing it with AI, we can find several similarities between them.

After August 1945, the world witnessed the power of nuclear weapons and realised the importance of developing nuclear technology. Shortly after the success of the Manhattan Project, the Soviet Union accelerated its atomic bomb project, followed by France, which established its Atomic Energy Commission to investigate military uses of atomic energy. According to the United Nations, the number of nuclear weapons during the Cold War increased from 3,000 in 1955 to over 60,000 in the late 1980s.

The rapid growth in nuclear arsenals reflected swelling political ambitions. While nuclear deterrence prevented another world war to some degree, the superpowers used it as an opportunity to dominate global discourse. Humans, the inventors of nuclear power, became its slaves.

Ironically, as if on the other side of the mirror, tech giants are now running a similar race in the AI industry, making little effort to hide their desire for power. What is certain is that they won’t halt their progress this time.

Now, Elon Musk has decided to start a new AI company, X.AI, to rival OpenAI. Will Musk’s company simply add fuel to the AI race, or does it reflect the same power-seeking behaviour? The answer is unclear for now.

Extra Credits

Advisor Keith Richburg

Editorial Director Hailey Yip

Multimedia Director Madeleine Mak

Multimedia Producer Wulfric Zhang

Illustrator Wulfric Zhang

Copy Editor Hugo Novales

Fact Checker Larissa Gao